What is OpenAI?

OpenAI is a research company that focuses on artificial intelligence (AI) and machine learning. Originally founded in 2015, OpenAI’s goal was to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.”

They pivoted in 2019 from a non-profit to a structure they call “capped” for-profit. The for-profit company, named OpenAI LP, was created to secure additional funding of $1 billion from Microsoft. The for-profit is still controlled by the non-profit called OpenAI Inc.

What OpenAI APIs are available?

GPT-3

The GPT-3.x language models are adept at a range of text-related generation and transform use cases including copywriting, summarization, parsing unstructured text, classification, and translation.

Pricing has recently dropped as their engineers find ways to run their model more efficiently and pass those savings on to us! The model is pay-as-you-go and pricing ranges from $0.0004 to $0.1200 per 1k tokens (~750 words) based on the model you use.
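To get a feel for what pay-as-you-go means in practice, here is a rough cost estimate in Python. It is only a sketch: the two prices are the ends of the range quoted above, the helper name `estimate_cost` is made up for this example, and real bills depend on the model you pick and how your text tokenizes.

```python
def estimate_cost(total_tokens: int, price_per_1k_tokens: float) -> float:
    """Return the estimated cost in US dollars for a given number of tokens."""
    return total_tokens / 1000 * price_per_1k_tokens


# Ends of the price range quoted above, in dollars per 1k tokens.
CHEAPEST_PER_1K = 0.0004
PRICIEST_PER_1K = 0.1200

# 1k tokens is roughly 750 words, so 10,000 tokens is about 7,500 words.
tokens = 10_000
print(f"cheapest model: ${estimate_cost(tokens, CHEAPEST_PER_1K):.4f}")  # $0.0040
print(f"priciest model: ${estimate_cost(tokens, PRICIEST_PER_1K):.4f}")  # $1.2000
```

Even at the top of the range, a few thousand words of experimentation costs around a dollar, which matches my experience with the free credit.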

New accounts receive $5 in free credit that can be used during the first 3 months, which is great for experimenting. In my first month of regular use, I didn’t even reach $1 in usage fees!

GPT-4 is now in limited beta. You can join the waitlist here: https://openai.com/waitlist/gpt-4-api

DALL·E

In January 2021, OpenAI introduced DALL·E, their state-of-the-art text-to-image model. In November 2022 they finally made it available as a public beta.

Pricing ranges from $0.016/image for 256×256 resolution to $0.020/image for 1024×1024 images. These can also take advantage of the new account credit.

Codex

Released in August 2021, Codex is a descendant of GPT-3 that translates natural language to code in over a dozen programming languages including JavaScript, TypeScript, Go, Perl, PHP, Ruby, Swift, SQL, and even Shell.

Some example usages include turning comments into code (magic!), contextual next line and full function completion, applicable API and library discovery, and rewriting code to improve performance.

API access is finally out of the waitlisted private beta and now in limited beta. It can be used for free during this initial period with rate limits of 20 requests per minute (RPM) and 40,000 tokens per minute (TPM).
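If you script against a rate-limited API like this, it helps to pace your calls on the client side. The sketch below spaces requests so they stay under a requests-per-minute limit (20 RPM works out to one request every 3 seconds). The `RequestPacer` class is a hypothetical helper, not part of any OpenAI library, and it ignores the tokens-per-minute limit entirely.

```python
import time


class RequestPacer:
    """Spaces out calls so they stay under a requests-per-minute limit."""

    def __init__(self, requests_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 60.0 / requests_per_minute
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until the next request is allowed; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last_call is not None:
            # Sleep only for whatever is left of the minimum interval.
            delay = max(0.0, self.min_interval - (now - self._last_call))
            if delay > 0:
                self._sleep(delay)
        self._last_call = now + delay
        return delay


pacer = RequestPacer(requests_per_minute=20)
print(pacer.min_interval)  # 3.0
```

Call `pacer.wait()` right before each request. The clock and sleep functions are injectable so the pacing logic can be checked without actually waiting.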

It also powers GitHub Copilot, so you can try it there as well. You can also watch a pretty impressive demo of it in use.

ChatGPT

In November 2022, OpenAI released ChatGPT, a model trained explicitly for conversational interactions. It retains the context of the ongoing dialog, allowing for follow-up questions and a chat-like experience.

It also now has an official API, and is their most affordable yet, priced at just $0.002 per 1k tokens, which is 10x cheaper than their existing GPT-3.5 models.

Whisper

Released in September 2022, Whisper is an open-source neural net speech-to-text model trained on a wide variety of audio data. It can perform multiple tasks, such as multilingual speech recognition, speech translation, and language identification.

It can be run directly from the open-source code and models, but OpenAI has also recently released the Whisper API that supports both transcription and translation features, priced at $0.006 / minute.

Who is already building on OpenAI’s API?

OpenAI’s release of APIs to access these machine learning models has resulted in a wave of new startups and service offerings. The AI Writing Assistants category alone has over 100 companies, with more launching every month.

Here are a few highlights of companies and products using these APIs in some form:

Notion has announced that they are integrating AI text generation features into their note-taking and productivity software. This functionality is now generally available and brings the power of artificial intelligence directly into your Notion workspace.

GitHub Copilot is built on Codex and uses the context in your editor to automatically generate whole lines of code or even entire functions.

Microsoft is bringing DALL·E to a new graphic design app called Designer, allowing users to create professional quality social media posts, invitations, digital postcards, graphics, and more. They are also integrating it into Bing and Microsoft Edge with Image Creator enabling users to create new images if web results don’t return what they’re looking for.

Duolingo uses GPT-3 to provide French grammar correction functionality. An internal study they conducted showed that the use of this feature leads to improved second-language writing skills.

Viable uses language models, including GPT-3, to analyze customer feedback and generate summaries and insights, helping businesses better and more quickly understand what customers are telling them.

Keeper Tax helps freelancers find tax-deductible expenses automatically by using GPT-3 to parse and interpret their bank statements into usable transaction information.

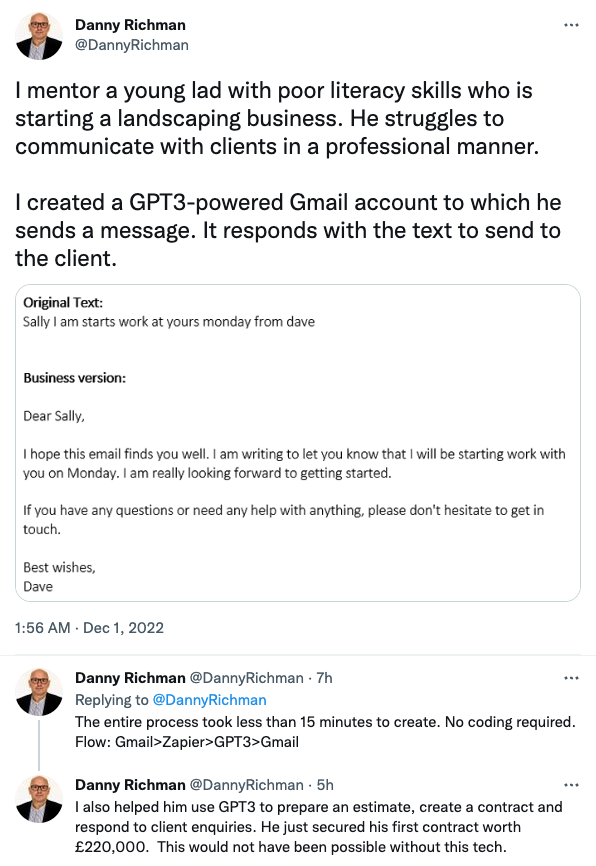

Individuals are also taking advantage of this new easy-to-use API access to language models to do some good in the world. Here’s a great example:

How can you use the OpenAI APIs?

Getting started with OpenAI is straightforward:

Step 1 — Create a new OpenAI account

Go to https://platform.openai.com/signup and create your account.

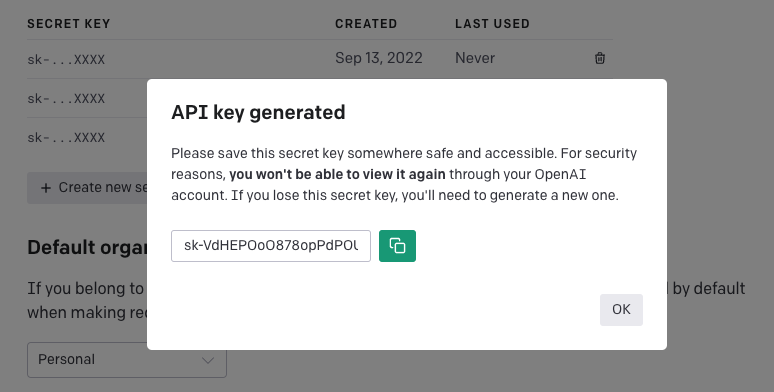

Step 2 — Generate an API key

Visit https://platform.openai.com/account/api-keys and click “Create new secret key”. Make sure to save this newly generated key somewhere safe, since you won’t be able to see the full key again once you close the modal.

Reminder: your API key is a secret, so don’t share it with anyone, use it in client-side code, include it in blog posts, or check it into any public GitHub repositories!
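One simple way to keep the key out of your source code is to read it from an environment variable at runtime. Here is a minimal Python sketch of that idea. The variable name `OPENAI_API_KEY` and both helper functions are assumptions for illustration, so use whatever naming fits your setup.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Read the API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key


def auth_headers(api_key):
    """Build the same two headers that the curl examples in this post send."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```

With this in place, the key lives in your shell profile or a secrets manager, and nothing sensitive ends up in a commit.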

Step 3 — Make a test call!

For simplicity, I’ll use curl in these examples, but you could make these calls using Postman, or via code using any of the language-specific libraries offered by OpenAI or the community: https://platform.openai.com/docs/libraries

Make this simple test request to get a specific model’s details, using your API key:

    curl https://api.openai.com/v1/models/text-davinci-003 \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer YOUR_API_KEY"

And if everything is working, you should get back a 200 response with JSON results like this:

    {
      "id": "text-davinci-003",
      "object": "model",
      "created": 1669599635,
      "owned_by": "openai-internal",
      "permission": [
        {
          "id": "modelperm-TULcdyRYjFvZGfo1snLsisCV",
          "object": "model_permission",
          "created": 1669678785,
          "allow_create_engine": false,
          "allow_sampling": true,
          "allow_logprobs": true,
          "allow_search_indices": false,
          "allow_view": true,
          "allow_fine_tuning": false,
          "organization": "*",
          "group": null,
          "is_blocking": false
        }
      ],
      "root": "text-davinci-003",
      "parent": null
    }

Using GPT-3

To prompt the model to respond with “Hello World”, use the completions endpoint like this:

    curl https://api.openai.com/v1/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer YOUR_API_KEY" \
      -d '{
        "model": "text-davinci-003",
        "prompt": "Say Hello World",
        "temperature": 0,
        "n": 1,
        "max_tokens": 5
      }'

You’ll get a response with the output text in the choices array, in this case as the only element since I asked for a single result via the n parameter.

    {
      "id": "cmpl-6DeO...",
      "object": "text_completion",
      "created": 1669700523,
      "model": "text-davinci-003",
      "choices": [
        {
          "text": "\n\nHello World!",
          "index": 0,
          "logprobs": null,
          "finish_reason": "stop"
        }
      ],
      "usage": {
        "prompt_tokens": 3,
        "completion_tokens": 5,
        "total_tokens": 8
      }
    }

A couple of parameters of note:

model – There are several models to pick from, some being less expensive per token than others. You can read more about them and their strengths and weaknesses here: https://platform.openai.com/docs/models/gpt-3

prompt – This is the important bit, and where your creativity is required to guide the language model toward the output you expect. Here are some great examples to get you started in learning what is possible and how to achieve your desired results: https://platform.openai.com/examples

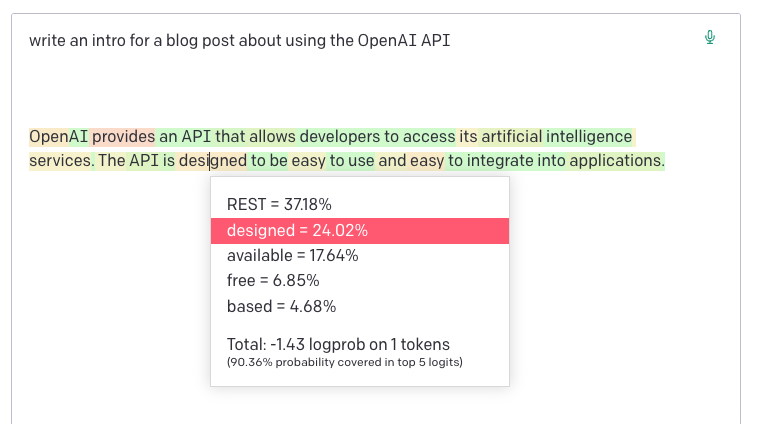

temperature – In AI writing assistant products you’ll often see this called “creativity”. It is set on a scale of 0 to 1, where 0 gives the most predictable output that tracks the prompt closely and 1 means “take lots of risks”. I used 0 in my prompt because I wanted it to return exactly what I asked for. With higher values, I found that I was less likely to get “Hello World” back. For most uses, I’d recommend 0.7 as a good starting point. You can read more about sampling temperature to learn about the concept.

n – This controls how many distinct completions to return from the prompt, which is why the results come back as an array under the choices key in the JSON response. Note: this number will act as a multiplier for token use, so keep an eye on this to manage your budget.

max_tokens – This tells the model how much content (tokens) to generate. There is an upper limit for each model, most being 2048 with newer models being 4096. I usually keep it around 256 or 512 for most things, but for long-form content, you’ll need to bump it up.
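To see how these parameters fit together in code, here is a small Python sketch that prepares the same completions request using only the standard library, and pulls the text out of a response. The function names are hypothetical, the defaults (0.7 and 256) are simply my suggestions from above, and the request is built but deliberately not sent so the example runs without a real key.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(api_key, prompt, model="text-davinci-003",
                             temperature=0.7, n=1, max_tokens=256):
    """Prepare (but do not send) a POST request for the completions endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "temperature": temperature,
        "n": n,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def worst_case_completion_tokens(n, max_tokens):
    """Upper bound on generated tokens: each of the n completions may use max_tokens."""
    return n * max_tokens


def extract_texts(response_body):
    """Pull the generated text out of every entry in the choices array."""
    return [choice["text"].strip() for choice in json.loads(response_body)["choices"]]


# With a real key, sending would look something like:
#   with urllib.request.urlopen(request) as resp:
#       body = resp.read().decode("utf-8")
request = build_completion_request("YOUR_API_KEY", "Say Hello World",
                                   temperature=0, max_tokens=5)

# A trimmed-down stand-in for the response shown earlier.
sample_body = '{"choices": [{"text": "\\n\\nHello World!", "index": 0}]}'
print(extract_texts(sample_body))                         # ['Hello World!']
print(worst_case_completion_tokens(n=3, max_tokens=256))  # 768
```

The last line is the budget warning in numeric form: asking for 3 completions of up to 256 tokens each can generate up to 768 tokens, on top of the prompt.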

BTW, instead of making direct API calls, you can also use the GPT-3 Playground which has a simple web interface for working with the API, using your existing account. It also enables some other neat features like colorized highlighting of token probabilities.

Using DALL·E

The primary endpoint for creating images is, not surprisingly, images/generations.

    curl https://api.openai.com/v1/images/generations \
      -H 'Content-Type: application/json' \
      -H 'Authorization: Bearer YOUR_API_KEY' \
      -d '{
        "prompt": "An illustration of a computer programmer writing code for a tutorial blog post",
        "n": 1,
        "size": "512x512"
      }'

The response will contain an array of generated image URLs which you can then open to view them. In this case, I’ve set the n parameter to 1, which asks for just a single generation.

    {
      "created": 1668720514,
      "data": [
        {
          "url": "https://oaidalleapiprodscus.blob.core.../img-odP0XBN8rHaARDz7TdJMrXnJ.png?..."
        }
      ]
    }

My first prompt asked for “a pencil sketch…”, and then I asked for “an illustration…”. I wouldn’t say these are exactly selfies, but not too far off!

You might notice a couple of the weaknesses of this current generation of text-to-image AI models, which also affect Midjourney and Stable Diffusion: trouble reproducing hands and text.

A couple of parameters of note:

prompt – The text description of the image you’d like to generate. Again, your creativity is required here to guide the model toward the output you want. For some excellent example prompts and the resulting images, do check out PromptHero.

n – Like the GPT-3 API, this controls how many variations to return from the prompt. Again, this number will act as a multiplier for image generations, so be conscious of this to manage your budget.

size – Dimensions of the generated images. Currently, the options are limited to 256x256, 512x512, or 1024x1024.
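Since the size options are a fixed list, it is cheap to validate them before spending a request. Here is a rough Python sketch that builds the request body and extracts the image URLs from a response. The helper names and the example.com URL are placeholders I made up for illustration.

```python
import json

# The three sizes listed above.
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}


def build_image_payload(prompt, n=1, size="512x512"):
    """Validate the arguments and return the JSON body for images/generations."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}, got {size!r}")
    if n < 1:
        raise ValueError("n must be at least 1")
    return {"prompt": prompt, "n": n, "size": size}


def extract_image_urls(response_body):
    """Collect the url of every generated image in the data array."""
    return [item["url"] for item in json.loads(response_body)["data"]]


payload = build_image_payload("An illustration of a computer programmer", n=1)
print(json.dumps(payload))

# Placeholder response with a made-up URL, shaped like the one shown earlier.
sample_body = '{"created": 1668720514, "data": [{"url": "https://example.com/img-1.png"}]}'
print(extract_image_urls(sample_body))  # ['https://example.com/img-1.png']
```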

Using Codex

The Codex API is finally available to test in a limited beta!

You use the same completions endpoint that GPT-3 uses, but you select one of the available Codex models, currently code-davinci-002 or code-cushman-001 (almost as capable as code-davinci-002, but faster).

Update from OpenAI:

On March 23rd [2023], we will discontinue support for the Codex API. All customers will have to transition to a different model.

Codex was initially introduced as a free limited beta in 2021, and has maintained that status to date. Given the advancements of our newest GPT-3.5 models for coding tasks, we will no longer be supporting Codex and encourage all customers to transition to GPT-3.5-Turbo.

GPT-3.5-Turbo is the most cost effective and performant model in the GPT-3.5 family. It can both do coding tasks while also being complemented with flexible natural language capabilities.

Here’s an example request to create a one line version of some given code:

    rabbits.forEach((rabbit) => {
      warren.push(rabbit);
    });

    curl https://api.openai.com/v1/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer YOUR_API_KEY" \
      -d '{
        "model": "code-davinci-002",
        "prompt": "Use list comprehension to convert this into one line of JavaScript:\n\nrabbits.forEach((rabbit) => {\n warren.push(rabbit);\n});\n\nJavaScript one line version:",
        "temperature": 0,
        "max_tokens": 60,
        "top_p": 1.0,
        "frequency_penalty": 0.0,
        "presence_penalty": 0.0,
        "stop": [";"]
      }'

The response is a slightly tidier way to get the bunnies home:

    {
      "id": "cmpl-6piblB1gRXtJbHqqO6FJGWGpcJ4o1",
      "object": "text_completion",
      "created": 1677784221,
      "model": "code-davinci-002",
      "choices": [
        {
          "text": "\n\nrabbits.forEach((rabbit) => warren.push(rabbit))",
          "index": 0,
          "logprobs": null,
          "finish_reason": "stop"
        }
      ],
      "usage": {
        "prompt_tokens": 45,
        "completion_tokens": 22,
        "total_tokens": 67
      }
    }

    rabbits.forEach((rabbit) => warren.push(rabbit))

You can also play with Codex using the JavaScript Sandbox, or in their full-featured Playground by selecting the available Codex models directly.

Using ChatGPT

March 1st 2023 Update: The official ChatGPT API is here!

The new endpoint can be found at v1/chat/completions, and accessed like so:

    curl https://api.openai.com/v1/chat/completions \
      -H "Authorization: Bearer YOUR_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "What is the OpenAI mission?"}]
      }'

Which will return a response like this:

    {
      "id": "chatcmpl-6p5...",
      "object": "chat.completion",
      "created": 1677693600,
      "model": "gpt-3.5-turbo",
      "choices": [
        {
          "index": 0,
          "finish_reason": "stop",
          "message": {
            "role": "assistant",
            "content": "OpenAI's mission is to ensure that artificial general intelligence benefits all of humanity."
          }
        }
      ],
      "usage": {
        "prompt_tokens": 20,
        "completion_tokens": 18,
        "total_tokens": 38
      }
    }

You can also use ChatGPT online with your account at chat.openai.com.

The ChatGPT gpt-3.5-turbo model performs at a similar level to the GPT-3 text-davinci-003 model but at only 10% of the price per token ($0.002 / 1K tokens), so consider it for most use cases. It might also be worth porting and retesting any existing GPT-3 prompts you already use in your tools or systems.

GPT models have typically been fed unstructured text. In contrast, ChatGPT models use a structured format, known as Chat Markup Language (ChatML). This format is composed of a series of messages, with each message having a header (currently only indicating who said it but with the potential to include more metadata) and content (currently only text but with the potential to include other data types).
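In practice, the structured format means you keep a list of role/content messages and send the whole list with each request, which is how follow-up questions keep their context. Here is a minimal Python sketch of that bookkeeping. The `Conversation` class is hypothetical, the reply text is invented, and I’m assuming the reply sits at choices[0].message in the response, as described in OpenAI’s API reference.

```python
import json


class Conversation:
    """Keeps the running list of messages for the chat completions endpoint."""

    def __init__(self, model="gpt-3.5-turbo"):
        self.model = model
        self.messages = []

    def add(self, role, content):
        """Append one message; role is e.g. "user" or "assistant"."""
        self.messages.append({"role": role, "content": content})

    def payload(self):
        """Return the JSON body to POST: the model plus the full history so far."""
        return {"model": self.model, "messages": list(self.messages)}

    def record_reply(self, response_body):
        """Store the assistant's reply in the history and return its text."""
        # Assumption: the reply is at choices[0].message in the response.
        reply = json.loads(response_body)["choices"][0]["message"]
        self.add(reply["role"], reply["content"])
        return reply["content"]


chat = Conversation()
chat.add("user", "What is the OpenAI mission?")

# Made-up response body standing in for a real API call.
sample_body = json.dumps({
    "choices": [{"index": 0, "message": {"role": "assistant",
                                         "content": "To benefit all of humanity."}}]
})
print(chat.record_reply(sample_body))

chat.add("user", "When was the company founded?")
print(len(chat.payload()["messages"]))  # 3
```

Note that every earlier message is sent again with each request and counts toward token usage, so long conversations get more expensive as they go.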

Note: The reverse-engineered libraries I previously mentioned for accessing the unofficial API from the initial research preview of ChatGPT are still available.

They have been updated to enable use of both the new official API (paid) and the unofficial API (still available and free, for now at least):

Node:

https://github.com/transitive-bullshit/chatgpt-api

Python:

https://github.com/acheong08/ChatGPT

https://github.com/rawandahmad698/PyChatGPT

Using Whisper

Being open-source, one option is to self-host and run the Whisper code and models directly, for free. It supports both command-line and Python code usage by default.

If you want to avoid the complexity and overhead of running it yourself, OpenAI has also made available an API for Whisper that supports both transcription and translation functionality, and uses the large-v2 model.

A sample speech-to-text transcription API request might look like:

    curl https://api.openai.com/v1/audio/transcriptions \
      -H "Authorization: Bearer YOUR_API_KEY" \
      -H "Content-Type: multipart/form-data" \
      -F model="whisper-1" \
      -F file="@/path/to/file/openai.mp3"

With the response containing the transcribed text from the audio:

    {
      "text": "Imagine the wildest idea that you've ever had, and you're curious about how it might scale to something that's a 100, a 1,000 times bigger..."
    }

File uploads are currently limited to 25 MB and support the following input file types: mp3, mp4, mpeg, mpga, m4a, wav, and webm.
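Those limits are easy to check locally before you upload anything. The Python sketch below is one way to do it. The `check_upload` helper is made up for this example, it only looks at the file extension rather than the actual contents, and it assumes “25 MB” means 25 × 1024 × 1024 bytes.

```python
import os

# Assumption: "25 MB" is read as 25 * 1024 * 1024 bytes.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024
SUPPORTED_EXTENSIONS = {"mp3", "mp4", "mpeg", "mpga", "m4a", "wav", "webm"}


def check_upload(filename, size_bytes):
    """Return a list of problems; an empty list means the file looks uploadable."""
    problems = []
    extension = os.path.splitext(filename)[1].lstrip(".").lower()
    if extension not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported file type: {extension or '(none)'}")
    if size_bytes > MAX_UPLOAD_BYTES:
        problems.append(f"file is {size_bytes} bytes, over the {MAX_UPLOAD_BYTES}-byte limit")
    return problems


print(check_upload("openai.mp3", 3_000_000))        # []
print(check_upload("talk.flac", 30 * 1024 * 1024))  # two problems reported
```

For a file on disk, you could pass `os.path.getsize(path)` as the size. Longer recordings would need to be split or compressed to fit under the limit.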

Although the model was trained on 98 languages, the languages listed below are the ones with a word error rate (WER) of less than 50%, which is an industry-standard benchmark for speech-to-text model accuracy. Results for other languages may be returned, but the quality will be poor.

Afrikaans, Arabic, Armenian, Azerbaijani, Belarusian, Bosnian, Bulgarian, Catalan, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, Galician, German, Greek, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Kannada, Kazakh, Korean, Latvian, Lithuanian, Macedonian, Malay, Marathi, Maori, Nepali, Norwegian, Persian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Tagalog, Tamil, Thai, Turkish, Ukrainian, Urdu, Vietnamese, and Welsh.

What are some benefits of using OpenAI APIs?

While there are plenty of software tools and services you can subscribe to that essentially wrap the OpenAI APIs and add domain-specific concepts and value on top, sometimes you might want to directly use the APIs yourself for experimentation, bulk processing, custom workflows, or integrating into your product (new or existing)… or simply to take advantage of the incredible cost savings!

And if working with the raw APIs directly isn’t something that works for your needs, interests, or skill level… you can always use one of those higher-level tools that have already integrated it for you:

GPT-3

There’s a new and growing list of GPT-3 AI writing tools maintained by Last Writer: AI Writing Assistant Directory

DALL·E 2

Join the waitlist for Microsoft Designer

Try the Bing Image Creator Preview (if it’s available in your region yet)

OpenAI also has its own DALL·E preview app that you can use as a simple UI to their model and uses your OpenAI account.

Codex

Because direct access to the Codex API is limited, your best option currently is to use GitHub Copilot.
